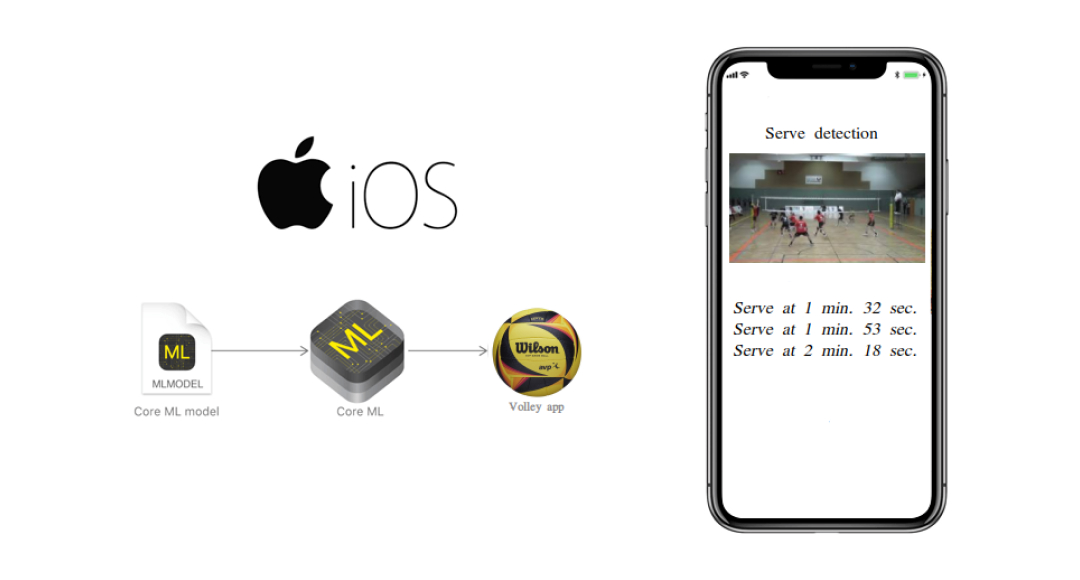

Volleyball Serve Detection on iPhone 12 Using Core ML

Machine learning and artificial intelligence solutions have propelled mobile app development to a new level. Applications that integrate machine learning can recognize and classify images, human voices, and actions, recognize text in images, and translate it. This list can easily go on. However, the main challenge for engineers remains the same: how to move huge models with millions of connections onto a mobile phone without losing processing speed or, crucially, quality.

Key advantages of ML on mobile devices

Let’s have a look at the main advantages of ML models implemented on mobile devices:

1) Near real-time processing. There is no need to send data through an API call and wait for the model's result to come back in a response. This can be critical for applications that process a video stream from the on-device camera.

2) Availability offline. This kind of application works without any network connection.

3) Privacy. Your data never leaves the device. This means you don’t need to send your data anywhere to be processed. All computations are performed on the device.

4) Low cost. The application runs without a network connection and makes no API calls, so it can be used anywhere without rigid constraints.

The main disadvantages of ML on mobile devices

Even though machine learning on mobile devices looks promising, it has its downsides.

1) Application size. Adding a machine learning model to the mobile application significantly increases the size of this application.

2) System utilization. Prediction on a mobile device requires substantial computational resources, which can increase battery consumption.

3) Model training. Usually, a model that runs on a mobile device has to be trained outside of that device. The same applies every time the model needs to be retrained.

Apple has created a framework called Core ML that aims to solve the problems that arise when developing mobile applications containing machine learning models. The tool is designed for integrating machine learning models on mobile devices and is used across Apple products to provide fast predictions with easy integration of pre-trained models. This allows you to develop applications that work in real time with live images or videos on Apple devices.

Main possibilities of Core ML

1) Real-Time Image Recognition

2) Face Detection

3) Text Prediction

4) Speaker Identification

5) Action classification

6) Predictions on tabular data

7) Different custom models

How Does it Work?

Core ML uses a machine learning model that was trained elsewhere (on your local computer, a cloud platform, etc.) and then converted to a format suitable for Core ML. The application itself, including the source code and the model integration, is developed in Xcode, the IDE for Apple platforms.

How to train models for Core ML?

To simplify the model development phase, you can build and train your model with the Create ML app, which is bundled with Xcode. Models trained with Create ML are saved in the Core ML format (.mlmodel) and are ready to use in your app.

Create ML supports five different categories of models:

1) Image – classification and detection models

2) Sound – classification

3) Motion – classification

4) Text – classification and tagging

5) Table – classification, regression and recommendation models.

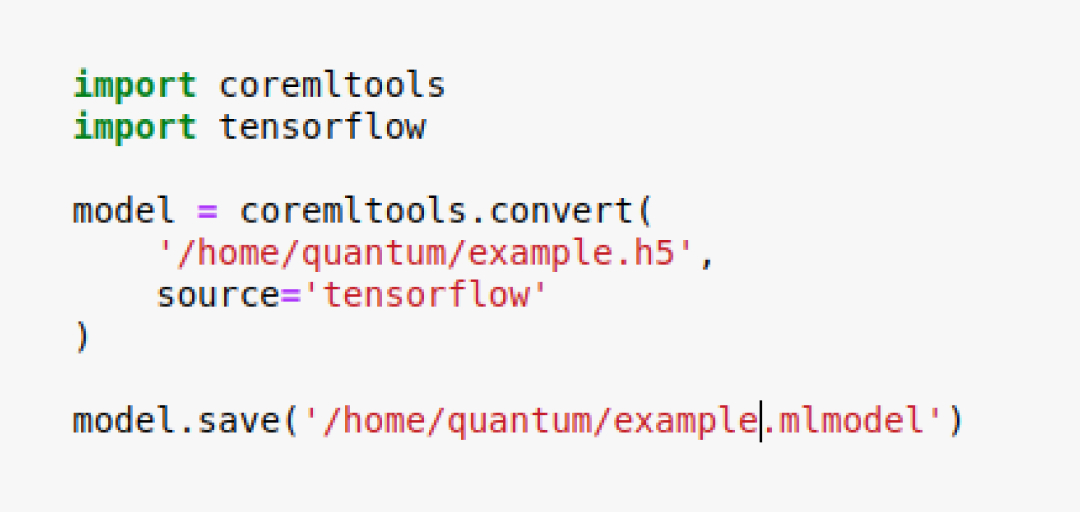

An alternative way to obtain models for Core ML is coremltools, a Python package for creating and testing models in the .mlmodel format. It can currently be used for the following tasks:

– Convert trained models from popular machine learning tools into Core ML format (.mlmodel)

– Make predictions using the Core ML framework.

The package can be installed with pip: pip install coremltools. With it, a tf.keras model can be converted into the .mlmodel format suitable for Core ML in a few lines of code. We used version 4.0 of coremltools in our project.
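The conversion step can be sketched roughly as follows. This is a minimal sketch rather than the project's actual code: it assumes coremltools 4.x and TensorFlow 2.x are installed, and the function names and the file name in the usage comment are hypothetical.

```python
from pathlib import Path


def mlmodel_path(name: str, directory: str = ".") -> Path:
    """Build an output path with the .mlmodel extension (hypothetical helper)."""
    return Path(directory) / f"{Path(name).stem}.mlmodel"


def convert_keras_to_mlmodel(keras_model, output_path):
    """Convert a trained tf.keras model to Core ML and save it to disk.

    Assumes coremltools 4.x. The import is done lazily so that this module
    can be loaded on machines where coremltools is not installed.
    """
    import coremltools as ct

    # ct.convert is the unified converter introduced in coremltools 4.
    mlmodel = ct.convert(keras_model)
    mlmodel.save(str(output_path))
    return mlmodel


# Usage (hypothetical file name, requires TensorFlow):
#   import tensorflow as tf
#   keras_model = tf.keras.models.load_model("serve_classifier.h5")
#   convert_keras_to_mlmodel(keras_model, mlmodel_path("serve_classifier.h5"))
```

The saved file can then be added to an Xcode project like any other .mlmodel file.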

Task definition

In our research, we tried to solve a typical sports analytics problem: action detection. The main condition was that the model had to run on an iPhone 12. We selected volleyball as the target sport and the serve as the target action. To simplify the problem, we treat serves from both sides of the court as a single class. For training, we used the public Volleyball Activity Dataset, which contains seven challenging volleyball activity classes annotated in six videos of professional matches from the Austrian Volley League (season 2011/12). We also extended this dataset with 25 videos that we collected ourselves.

The main challenge is that sometimes we can’t see the player who is serving (they are behind the camera). The model therefore has to work well enough for both visible and hidden serves.

What we tried on Create ML

Action Classifier

Our first assumption was that the Action Classifier from Create ML would solve our problem. We were inspired by some tutorials on using it. For a brief overview, you can check this video from the official page.

In short, the Action Classifier takes a window of sets of body key points (usually 19) as input and outputs an action class. The window size is up to the developer; for our problem, we used a window of 30 frames (about 1 second).

During the experiments, we found that the Action Classifier works well only when a single person is present on the screen. We didn’t find a good way to do multi-person action classification with Apple’s MLActionClassifier, so our research in this direction was not successful.
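To illustrate the windowing, here is a minimal sketch that cuts a sequence of per-frame key points into 30-frame windows. The (x, y, confidence) layout, the stride parameter, and the function name are assumptions made for this example; they are not taken from Create ML.

```python
from typing import Iterator, List, Sequence, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence): an assumed layout
Pose = Sequence[Keypoint]              # key points of one person in one frame


def sliding_windows(
    frames: Sequence[Pose], window: int = 30, stride: int = 1
) -> Iterator[List[Pose]]:
    """Yield consecutive windows of `window` frames, advancing by `stride`."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    # Only full windows are produced; a trailing partial window is dropped.
    for start in range(0, len(frames) - window + 1, stride):
        yield list(frames[start:start + window])
```

With a stride smaller than the window, neighbouring windows overlap, which gives the classifier more chances to see an action that starts mid-window.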

Image Classifier

Our second approach was to use another tool from Create ML, the Image Classifier. As mentioned earlier, we had a dataset with visible and invisible serves, so we decided to try event classification instead of action classification. In other words, we planned to classify images according to the positions of all players. For a serve this is not difficult: all players on the serving team except one stand near the net and try to hide the serve from the opponents. The opposing team also shows a strong pattern: all of its players are static and preparing to receive the ball.

It’s really easy to train an image classification model in Create ML, and you don’t need to write a single line of code. The workflow is as follows:

1) Organize the training dataset directory. Each class of images should be located in its own directory.

2) Organize the test dataset directory in the same way as the training set.

3) Create an Image Classification project.

4) Configure the training set, set up augmentations, and set the number of epochs.

5) Wait for training to finish. The time depends on the number of images, their size, and some other parameters.

6) Save the model and use it in the Xcode project.
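Steps 1 and 2 can be automated with a small script. The sketch below copies images into one folder per class; the function name and the class names in the usage comment are hypothetical.

```python
import shutil
from pathlib import Path
from typing import Dict


def organize_by_class(labels: Dict[str, str], output_dir: str) -> Dict[str, int]:
    """Copy each image into output_dir/<label>/ and return per-class counts.

    `labels` maps an image path to its class name.
    """
    counts: Dict[str, int] = {}
    root = Path(output_dir)
    for image_path, label in labels.items():
        class_dir = root / label
        class_dir.mkdir(parents=True, exist_ok=True)
        shutil.copy2(image_path, class_dir / Path(image_path).name)
        counts[label] = counts.get(label, 0) + 1
    return counts


# Usage (hypothetical paths and class names):
#   organize_by_class({"frames/0001.jpg": "serve",
#                      "frames/0002.jpg": "no_serve"}, "dataset/train")
```

Running it once for the training images and once for the test images produces the two directory trees the project expects.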

However, we also faced some issues at this step, namely:

– The accuracy of the image classifier was not high enough. We obtained about 70% accuracy on serve detection.

– Training time was very long: more than 10 hours on a MacBook with a dataset of about 15,000 samples.

How did we solve these problems?

We decided to stay with the classification formulation, but this time we prepared a dataset in which each sample is a set of 30 sequential grayscale frames. The output, as before, is a class label. After training the model with the tf.keras framework, we converted it to .mlmodel. The final F1-score was about 0.87.
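For reference, the F1-score is the harmonic mean of precision and recall. The sketch below computes it from raw counts of true positives, false positives, and false negatives; the function name is our own, and no counts from this project are implied.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2 * precision * recall / (precision + recall), from raw counts."""
    if tp == 0:
        # No true positives: precision and recall are both zero (or undefined).
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)
```

Unlike plain accuracy, this metric is not inflated by the many windows that contain no serve, which makes it a reasonable choice for a rare event.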

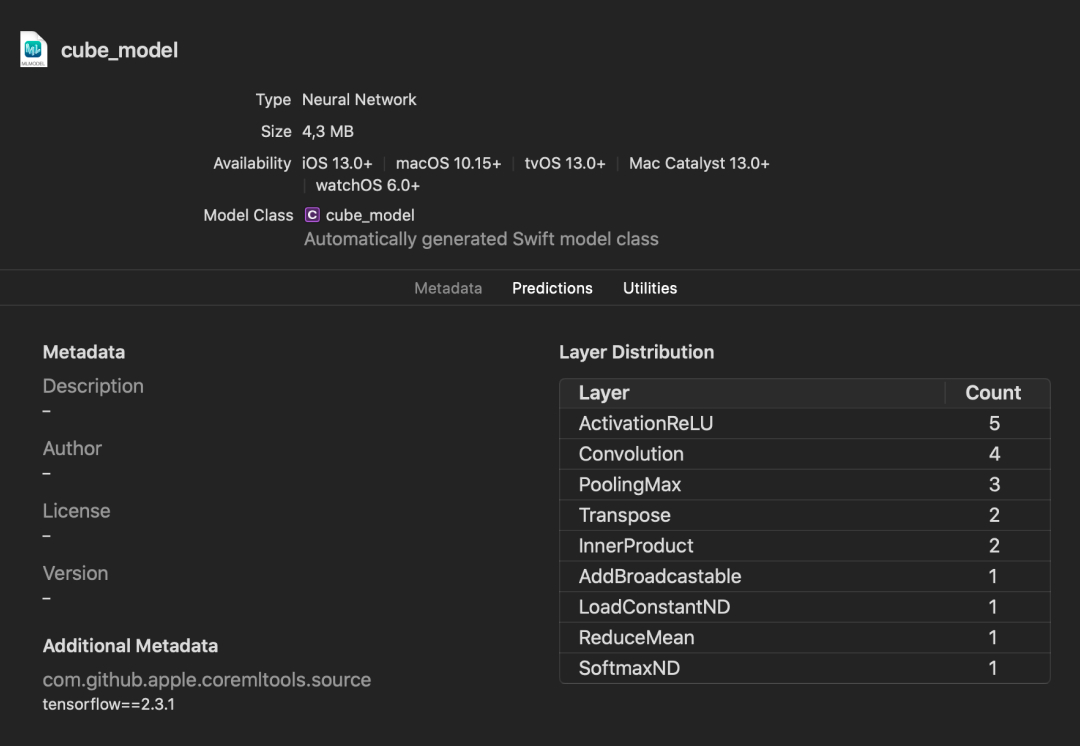

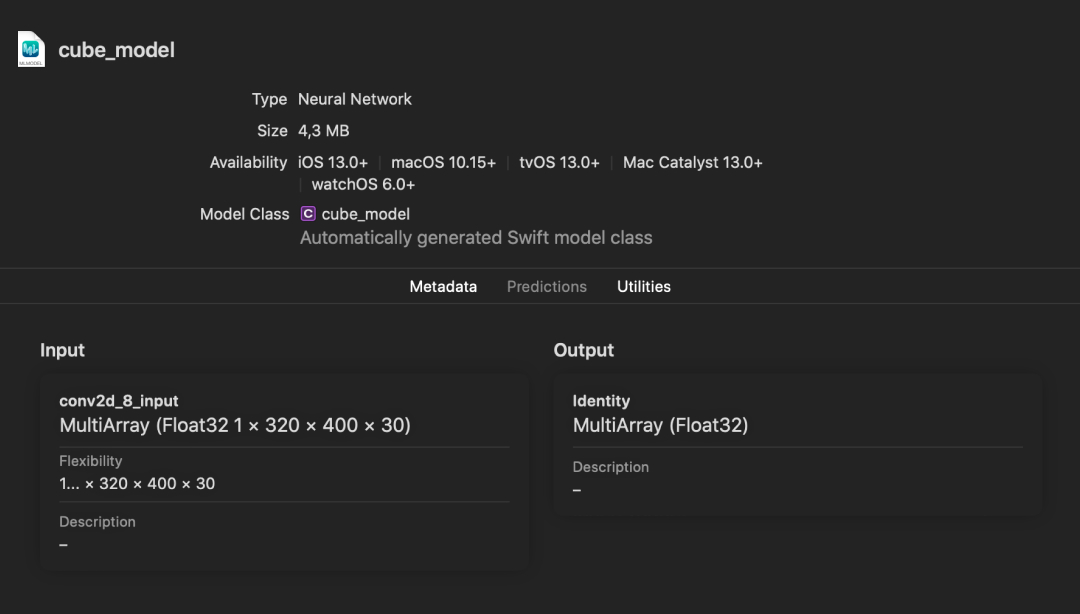

How to integrate .mlmodel into the project?

Once you have a trained .mlmodel, it’s really easy to integrate it into your Xcode project: you just need to copy it into the project’s directory. If you click on the model file, you will see a window with all the available information about it (see screenshots below).

On the first tab, you can see the information about layers inside your neural network.

On the second tab, you can check the input and output data types and dimensions.

Another useful feature: you can click on “Automatically generated Swift model class” and see all the definitions, inputs, and outputs of the class generated for your model. This information should help you integrate the model into the code.
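The same input and output information can also be read outside Xcode. The following is a minimal sketch in Python, assuming coremltools is installed; the function names are hypothetical, and the file name in the usage comment is a placeholder.

```python
from typing import Iterable, List, Tuple


def describe_features(features: Iterable) -> List[Tuple[str, str]]:
    """Return (name, type) pairs for Core ML feature descriptions.

    Each item is expected to look like a protobuf FeatureDescription:
    it has a `name` and a `type` whose active field is found via WhichOneof.
    """
    return [(f.name, f.type.WhichOneof("Type")) for f in features]


def summarize_mlmodel(path: str) -> dict:
    """Load a .mlmodel specification and list its inputs and outputs.

    Assumes coremltools is installed; imported lazily.
    """
    import coremltools as ct

    spec = ct.utils.load_spec(path)
    return {
        "inputs": describe_features(spec.description.input),
        "outputs": describe_features(spec.description.output),
    }


# Usage (placeholder file name):
#   print(summarize_mlmodel("serve_classifier.mlmodel"))
```

This only reads the model specification, so it does not need to run a prediction.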

Conclusions

Core ML is a really powerful tool that allows you to integrate models of any complexity into mobile applications. To simplify integration, Apple developed Create ML, which lets you create different ML models with minimal code. However, our project showed that Create ML is not suitable for every task. In our research on serve detection in volleyball, we were not able to obtain good results with it: we couldn’t build an action classifier that handles multiple people, and the image classifier did not reach a high enough score.

As a result, we took another approach: training with tf.keras and then converting the model to the .mlmodel format. The resulting serve detection model for volleyball games runs in real time (26 FPS) with an F1-score of about 0.87.