What is geospatial MLOps, and do you need it?

Michael Yushchuk

Head of Data Science

The market demand for MLOps continues to grow dramatically, making it the new black in the IT industry. Businesses widely use ML models to keep up with competitors in tackling large-scale business problems and pursuing tangible benefits. Yet only a few seem to understand Machine Learning Operations and why neglecting these practices leads to significant performance losses. According to Algorithmia’s report “2020 State of Enterprise Machine Learning”, many companies still haven’t achieved their ML/AI goals.

In this article, we will use real-life examples to break down the term “Machine Learning Operations” and explain why MLOps is needed, particularly in geospatial analysis.

Why is everyone so obsessed with MLOps?

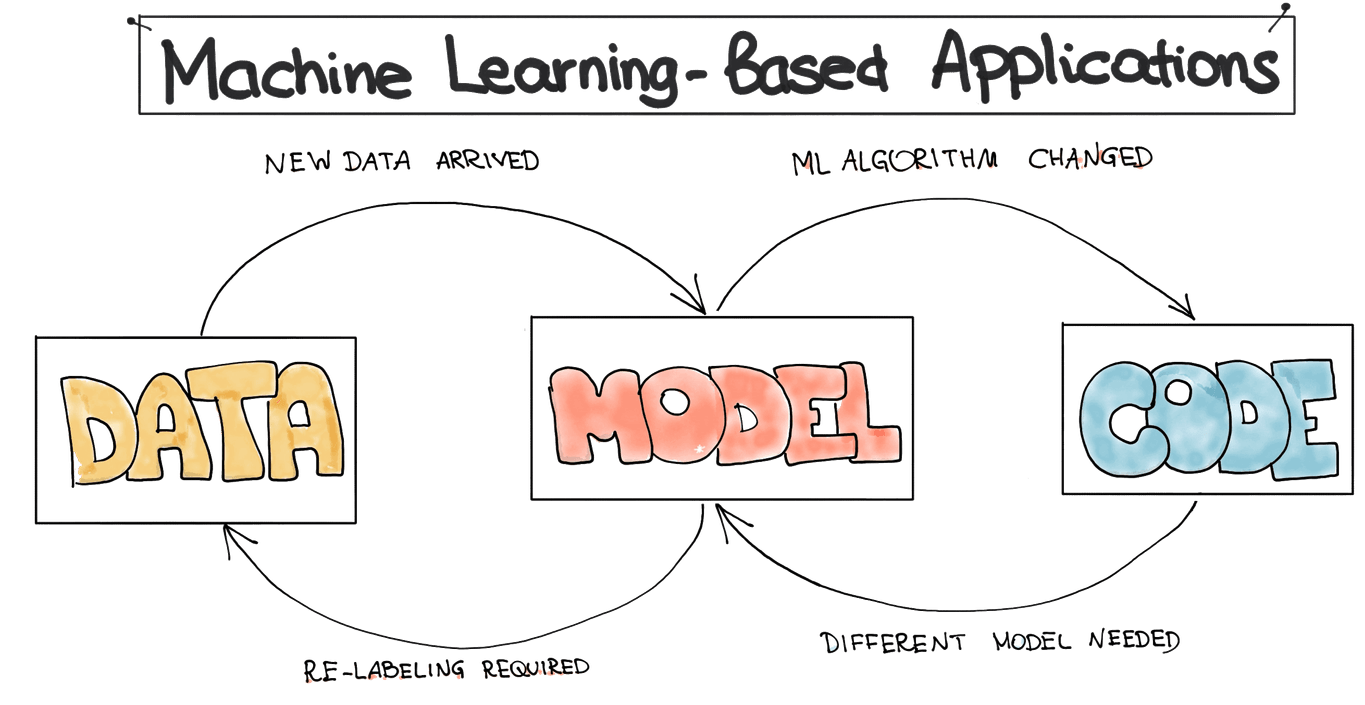

One critical challenge in machine learning is creating a system to continuously evaluate, maintain, integrate, and update ML models to ensure compatibility with the required data and software environment in which these models run. Many data scientists spend most of their time preparing, validating, and structuring data, running multiple integration tests, and managing infrastructure rather than doing data science. To better grasp the issue, let’s have a closer look at the ML development process itself.

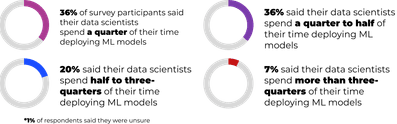

Survey: What percentage of your data scientists’ time is spent deploying ML models?

Model creation, delivery, and maintenance – ML Operations – are often separated during ML development, resulting in significant time loss. That is precisely the case for applying MLOps: it avoids holdups and optimizes the entire ML lifecycle. But what is MLOps anyway?

MLOps, or Machine Learning Operations, is a set of practices that aims to automate the ML model lifecycle reliably and efficiently. These practices combine ML development, training, testing, deployment, monitoring, and management into a single automated mechanism.

In simple terms, MLOps provides the necessary tools for model orchestration, defines the roles and responsibilities of each particular ML process, and ensures continuous delivery and integration of ML models into large-scale production environments. This approach avoids widespread obstacles throughout development and better aligns ML models with business needs and regulatory requirements.

Why does it matter?

Only 22% of companies using machine learning have successfully deployed an ML model into production

Turning a model into an actual business asset takes more than just creating it. To actually profit from the ML model, we need to incorporate a trained ML model into production, making it available to the core system.

According to a new Gartner survey, fewer than half of AI projects make it to production, while the rest never leave the PoC stage. The reason is the large number of issues that arise during development, such as:

- ML models don’t fit business needs in terms of revenue, customer retention, etc. This might happen due to a lack of groundwork, the wrong choice of ML technique, or business objectives that have little overlap with what machine learning can deliver.

- High dependency on data quality. Since ML models run on data, they are sensitive to the semantics, amount, and completeness of incoming data. Some data could be missing, changed, or insufficient, and the issue may go unnoticed during training. This changes the relationship between input and output variables and degrades model performance in production (model decay).

- The model lifecycle proves tough to manage, and the operational costs turn out to be higher than expected. As software systems become more complex, organizations find that in-house development doesn’t make much sense.

- Data locality. For instance, transferring ML models pre-trained on different user demographics to new customer segments might not work correctly according to quality metrics.
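The data-quality and data-locality issues above usually show up as a shift between the data a model was trained on and the data it sees in production. As a minimal sketch of how such a shift can be detected, the snippet below computes the Population Stability Index (PSI) for one numeric feature. PSI is our choice for illustration, not a metric named in this article, and the function name, the bin count, and the floor value are assumptions.

```python
import bisect
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a training-time sample and a production sample of one feature."""
    ordered = sorted(expected)
    # Interior bin edges are quantiles of the training sample,
    # so each bin holds roughly the same share of training data.
    edges = [ordered[len(ordered) * i // bins] for i in range(1, bins)]

    def fractions(values):
        counts = [0] * bins
        for value in values:
            counts[bisect.bisect_right(edges, value)] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    exp_frac = fractions(expected)
    act_frac = fractions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_frac, act_frac))
```

A monitoring job could call this for each input feature on every new batch and flag the model for review or retraining when the value exceeds a chosen threshold. A value around 0.25 is a commonly quoted rule of thumb for a significant shift, but the right threshold depends on the project.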

Unfortunately, MLOps isn’t a magic pill and can’t handle every rapidly emerging challenge. Its main purpose is to overcome the most prevalent model lifecycle issues.

Three representative scenarios where implementing MLOps is mandatory:

- Scaling ML Operations in large production environments: the more requests you get, the more data you need to retrieve. Imagine your business has grown rapidly, and your models can’t scale fast enough to handle tons of user requests. You need MLOps to cope promptly with the intense load and maintain the system infrastructure.

- Dealing with sensitive data and regulatory requirements. Using ML makes companies responsible for complying with legal requirements, such as GDPR, NIST, or HIPAA. They need well-established processes to control access, track interactions with ML models and their results, and document the entire ML lifecycle. MLOps adoption helps ML systems comply with legal requirements by providing the appropriate infrastructure for model governance.

- Aligning ML to business needs at scale. It is not unusual for a business objective to change during project development, thus requiring instant changes to the machine learning algorithm for model training.

MLOps in geospatial analysis

Over the years, the concept of “geospatial” has evolved considerably. The days when geospatial data was associated only with GIS and GPS have passed, and “geospatial” is no longer mainly about maps. It’s about the data and insights we can obtain, for instance, with predictive analytics, as we do with the Geo Analytics Platform, Quantum’s platform for AI-powered geospatial analytics.

As part of data analytics, predictive analytics combines historical data analysis, statistical modeling, and machine learning to predict future outcomes. Based on past and current satellite data, organizations can forecast meaningful indicators, such as estimated carbon emissions, illegal logging, weather, and many more. Read more about geospatial analytics and its benefits across various industries.

However, geospatial analysis usually requires massive amounts of data, which makes processing challenging or even impossible without AI and machine learning running regression, classification, and clustering tasks on spatial data. Working with satellite data is challenging in itself, and passing images through collection, cleaning, transformation, enhancement, segmentation, and classification takes hours. Since the data is constantly updated, all of these operations have to be run over and over again, so the model training pipeline needs to be automated.
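To make the idea of an automated pipeline concrete, here is a deliberately tiny sketch in which each processing stage is a plain function and the pipeline simply chains them, so every new scene can be pushed through the same steps without manual work. The step names, the dictionary layout of a scene, and the threshold-based "classifier" are all hypothetical stand-ins, not GeoAP code.

```python
from typing import Callable, Iterable

Step = Callable[[dict], dict]


def make_pipeline(steps: Iterable[Step]) -> Step:
    """Chain processing steps into one callable that runs them in order."""
    steps = list(steps)

    def run(scene: dict) -> dict:
        for step in steps:
            scene = step(scene)
        return scene

    return run


def clean(scene: dict) -> dict:
    # Drop missing pixel values (stand-in for no-data or cloud masking).
    return {**scene, "pixels": [p for p in scene["pixels"] if p is not None]}


def normalize(scene: dict) -> dict:
    # Rescale pixel values to the 0..1 range (stand-in for transformation).
    lo, hi = min(scene["pixels"]), max(scene["pixels"])
    span = (hi - lo) or 1.0
    return {**scene, "pixels": [(p - lo) / span for p in scene["pixels"]]}


def classify(scene: dict, threshold: float = 0.5) -> dict:
    # Toy threshold rule standing in for a trained ML model.
    labels = ["vegetation" if p >= threshold else "other" for p in scene["pixels"]]
    return {**scene, "labels": labels}


pipeline = make_pipeline([clean, normalize, classify])
result = pipeline({"id": "scene-001", "pixels": [10, None, 30, 20]})
```

In a real setup each step would read and write imagery rather than short lists, and a scheduler or orchestrator would call the pipeline whenever new data arrives.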

Using geospatial MLOps to automate the geospatial data pipeline has a bunch of benefits:

- Reduces manual involvement in repetitive processes severalfold by automating the initial steps of the training process.

- Makes model outputs more predictable. Because the ML model is trained continuously, the accumulated history of past events helps it learn patterns and predict future results.

- Improves model reliability by enabling more accurate predictions.

- Enhances model-risk management. Constant model training on large datasets identifies behavior patterns, making it possible to manage potential risks efficiently.

What will happen to a GIS project without MLOps?

First, it’s worth noting that not every project needs MLOps. For example, small R&D projects that last just a few months can do without it because they are short-term.

In contrast, long-term production GIS projects processing vast amounts of data and operating numerous ML models require automation with MLOps in some form to ensure continuous model training to avoid model drift and degradation.

Otherwise, data scientists spend a lot of avoidable time on model monitoring, management, and maintenance. Consequently, big businesses may experience significant analysis delays and risk losing current and potential customers. Based on the experience of a large agricultural company we have worked with, MLOps adoption cut the time spent on development and operational processes by a factor of three.

Saving time and costs with the Geo Analytics platform

Quantum’s Geo Analytics platform (GeoAP) is an AI-powered solution that provides a set of components for efficiently processing satellite and drone imagery, reducing development time and cutting the budget for geoanalytics solution development by up to 60%. GeoAP is an easy-to-use product that lets you download satellite imagery, deploy and execute your own or existing ML models, and visualize the insights on a map. It also includes dashboards for model lifecycle management and experiment tracking that automate model governance.

Scalability with Kubernetes

Previously, we kept our ML models in storage and managed and executed them in Jupyter Notebooks on request. Eventually, this capacity wasn’t enough. With the need to scale and handle massive satellite/drone data without time and performance losses, we adopted Kubernetes to build scalable and efficient pipelines and provide the best customer experience. Such a system is instrumental in ML pipelines since it can run several models in parallel without loss in performance.

To bring the flexibility of microservices to machine learning pipelines, Kubernetes offers four capabilities: scalability, GPU support, data management, and infrastructure abstraction. It meets numerous computational challenges by orchestrating workflows through containers and gives data scientists scalable access to CPUs and GPUs: resources scale up automatically when a computation spikes and scale back down when the task is finished.
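One mechanism Kubernetes provides for this kind of scaling is the Horizontal Pod Autoscaler, which adjusts the number of pod replicas using the rule desired = ceil(current replicas × current metric / target metric). The sketch below reproduces only that core rule in Python to show how a spike scales a workload up and an idle period scales it back down. Whether GeoAP relies on this particular autoscaler is not stated here, and the function name, the replica limits, and the example numbers are illustrative assumptions. The real autoscaler also applies a tolerance and stabilization windows, which are omitted.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Core Horizontal Pod Autoscaler rule, clamped to the allowed replica range."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Load above target (90% average utilization vs. a 60% target): scale up.
scale_up = desired_replicas(2, current_metric=90, target_metric=60)    # 3
# Load far below target: scale back down.
scale_down = desired_replicas(4, current_metric=15, target_metric=60)  # 1
# A large spike is capped by max_replicas.
capped = desired_replicas(3, current_metric=200, target_metric=50)     # 10
```

The clamp to `min_replicas` and `max_replicas` mirrors the bounds an operator sets to keep costs and cluster capacity under control.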

Model orchestration – geospatial pipelines

Using an automated model orchestrator in GeoAP, we address the architectural challenge and eliminate numerous manual steps in deployment and scaling. An orchestrator automates the configuration, deployment, management, and monitoring of containerized pipelines of different scales. Acting as an operator, it manages the container lifecycle to provide high availability with load balancing and fault recovery according to pre-defined rules and conditions.
